In recent years, artificial intelligence (AI) has advanced rapidly across many fields, changing the way we live, work, and communicate. One notable achievement is the development of language models like OpenAI’s ChatGPT, which can generate human-like text and hold conversations. However, these capabilities bring with them the challenge of ensuring that AI is used responsibly and ethically.

As the use of AI language models becomes widespread, so does the need to address potential misuse. In particular, there is a growing concern about the manipulation of AI-generated text, such as spreading misinformation, promoting hate speech, or bypassing content filters.

OpenAI’s safety training and efforts to reduce biases have allowed ChatGPT to refuse certain types of malicious prompts. However, the existence of systems designed to bypass such safeguards raises questions about how effective they really are.

The Challenge of GPT Detectors

Detecting and mitigating harmful or inappropriate content generated by AI models is a complex task. OpenAI, as the creator of ChatGPT, has put substantial effort into developing detectors that identify and block prompts leading to problematic outputs. These detectors aim to prevent the propagation of misinformation, hate speech, and other undesirable content. But just as AI models continue to improve, so do the attempts to bypass these safeguards.

Introducing Bypass GPT: A Challenge to Existing Detectors

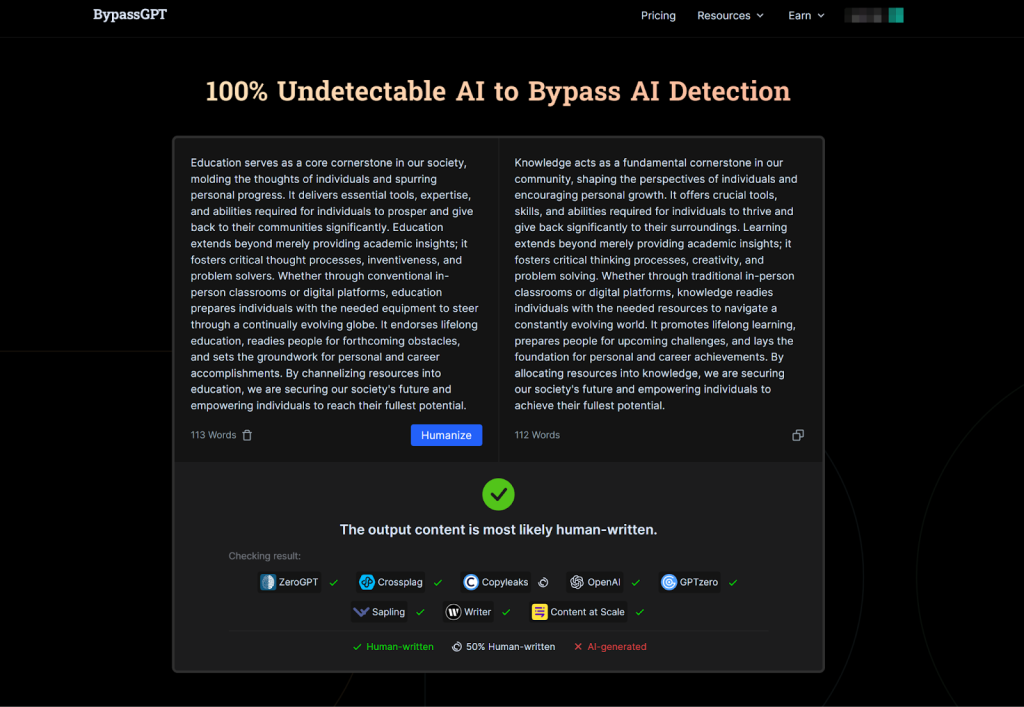

One system that has gained attention in the AI community is Bypass GPT, developed by a group of researchers. Bypass GPT demonstrates how hard it is to design detectors that reliably prevent malicious uses of AI models. By exploiting weaknesses in the detection mechanisms, it allows users to produce AI-generated text that can potentially bypass content filters and evade detection.

Understanding the Bypass GPT Approach

Bypass GPT leverages a technique known as “lexical manipulation” to deceive the detection systems. It takes advantage of the fact that these systems are sensitive to specific phrases or patterns, and it avoids the wording that would trigger the warning mechanisms meant to flag potentially harmful content. By carefully selecting the sequence of words and the context, Bypass GPT seeks to slip past content filters and produce text that might otherwise be blocked.

The Bypass GPT creators present their system as a call for improved detection mechanisms. They argue that as AI models become more powerful, detectors need to evolve as well, constantly adapting to new evasion techniques. This ongoing battle between AI models and detectors underscores the need for robust and continuously updated safeguards.
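
To see why detectors have to keep adapting, consider a minimal Python sketch of a filter that matches exact phrases and a slightly sturdier one that normalizes text first. This is a toy illustration under stated assumptions, not how ChatGPT, OpenAI’s detectors, or Bypass GPT actually work: the names `BLOCKED_TERMS`, `LOOKALIKES`, `naive_filter`, and `normalized_filter` are hypothetical, and the placeholder term "forbidden" stands in for whatever a real filter would target.

```python
import unicodedata

# Hypothetical blocklist with a harmless placeholder term.
BLOCKED_TERMS = {"forbidden"}

# Hypothetical table of common look-alike character substitutions.
LOOKALIKES = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "@": "a", "$": "s"}
)


def naive_filter(text: str) -> bool:
    """Flag text only when a blocked term appears verbatim (ignoring case)."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def normalized_filter(text: str) -> bool:
    """Flag text after undoing simple lexical disguises."""
    # Decompose accented characters, then drop the combining marks.
    folded = unicodedata.normalize("NFKD", text.lower())
    unaccented = "".join(ch for ch in folded if not unicodedata.combining(ch))
    # Map look-alike digits and symbols back to letters.
    mapped = unaccented.translate(LOOKALIKES)
    # Remove spaces and punctuation inserted to split a term apart.
    collapsed = "".join(ch for ch in mapped if ch.isalnum())
    return any(term in collapsed for term in BLOCKED_TERMS)
```

In this sketch, `naive_filter("this is f0rb1dden")` returns `False`, while `normalized_filter` returns `True` for the same input. The trade-off is that removing all separators can produce false positives when a blocked term happens to span two ordinary words, and a fixed substitution table only covers disguises someone has already thought of, which is the same adapt-and-respond cycle described above.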

The Implications and Ethical Considerations

The development and use of systems like Bypass GPT raise ethical questions that require careful consideration. On the one hand, the research behind Bypass GPT can provide valuable insights into the limitations of current detection mechanisms and inform the development of more robust safeguards. It serves as a reminder that we must stay ahead of those seeking to misuse AI-generated content.

On the other hand, the potential for abuse and the creation of tools to bypass content filters highlight the responsibility of AI developers and organizations. They prompt a reconsideration of the delicate balance between enabling innovation and ensuring the responsible use of AI. Striking this balance requires ongoing research, collaboration, and transparency within the AI community, as well as active involvement from users in reporting and addressing emerging threats.

The Role of Humanizing AI Text

An important aspect of the conversation around AI-generated content is the need to “humanize” the language models. Humanizing AI text refers to the process of bridging the gap between machine-generated text and human-generated text in terms of nuance, context, and moral judgment. By incorporating human-like reasoning and ethical considerations into AI models, we can reduce the likelihood of misinterpretation and misuse.

Work on humanizing AI text, such as the efforts made by Bypass GPT, not only aids in the development of more effective detectors but also contributes to the responsible deployment of AI technologies. When AI models understand context, recognize biases, and reflect societal norms, they can offer more reliable and responsible outputs.

Moving Forward: Challenges and Opportunities

The emergence of tools like Bypass GPT shines a light on the evolving conversation surrounding the responsible use of AI-generated text. As AI models continue to improve, so must the detectors that safeguard against misuse. Organizations like OpenAI face the challenge of staying ahead of potential threats while maintaining the principles of openness, collaboration, and transparency.

Researchers, policymakers, and AI developers have crucial roles to play in shaping the landscape of AI technology. Strengthening research into detection mechanisms and exploring innovative approaches to humanizing AI text are two promising avenues. Close collaboration across the AI community, including developers, researchers, and users, is essential to address the challenges associated with AI-generated content and ensure the responsible use of this powerful technology.

While tools such as Bypass GPT may appear concerning, they play a vital role in highlighting the need for continuous improvement and adaptation. By learning from these challenges, we can foster a safer and more responsible AI ecosystem, where AI-generated content enhances our lives without compromising vital ethical considerations.